Reading only a specifed range of records

The File Metadata offers the possibility to define filters, that allows to specify a range of records to process.

This can be useful to define more precisely the records to treat when loading files.

Due to some maintenance operations, stambia.org will be switched to read-only mode during the 13th November. It will be possible to read and download, but impossible to post on the forums or create new accounts. For any question please contact the support team.

The File Metadata offers the possibility to define filters, that allows to specify a range of records to process.

This can be useful to define more precisely the records to treat when loading files.

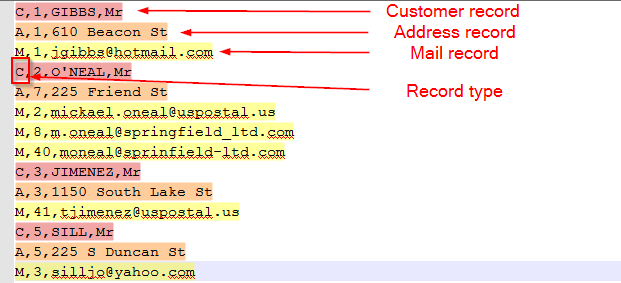

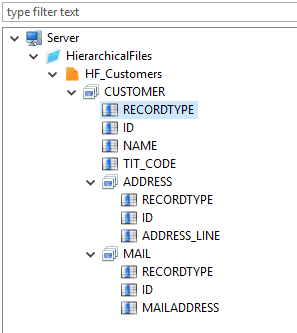

A hierarchical file is a file containing multiple records which can have different structures and can be nested.

Generally, these lines start with a "record type" field which helps recognizing each line type.

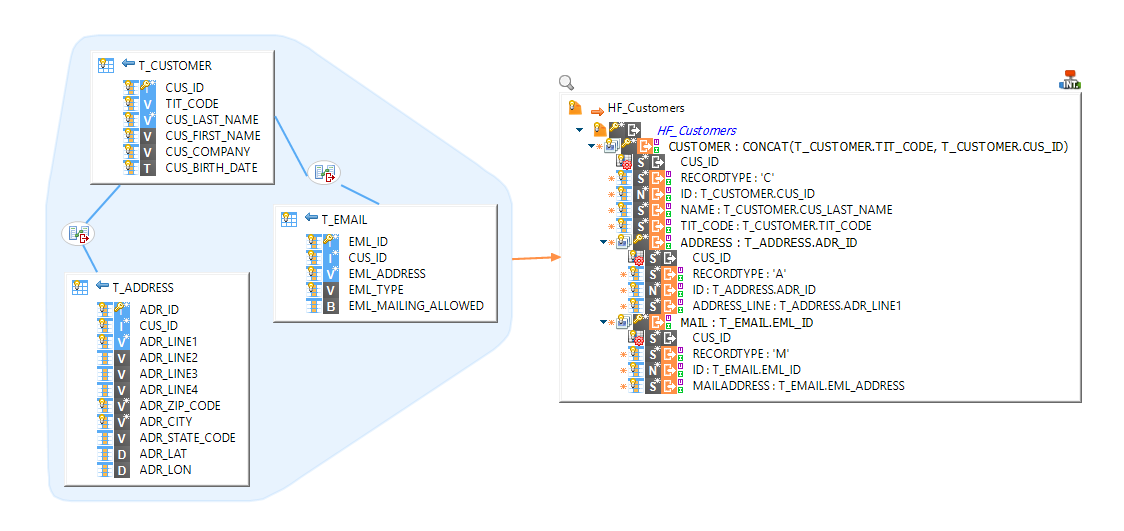

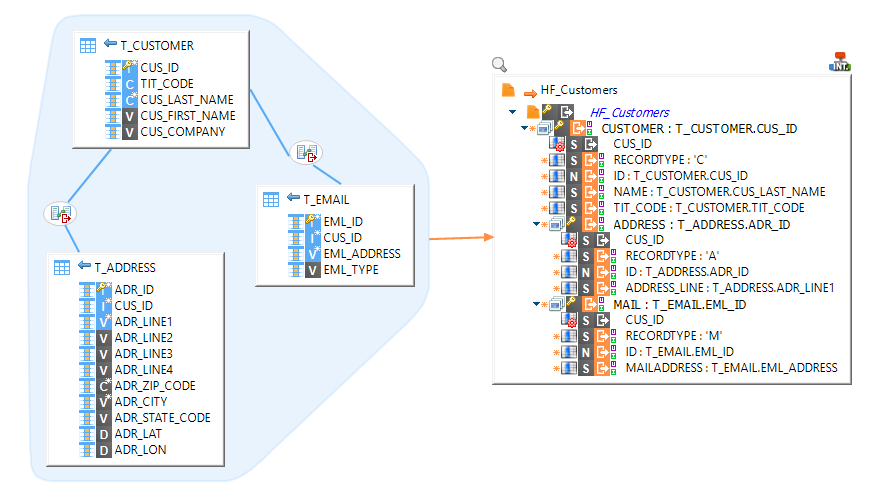

For example, a file storing Customers, their addresses and mails may look like this:

This article demonstrates how to handle this kind of files with Stambia DI.

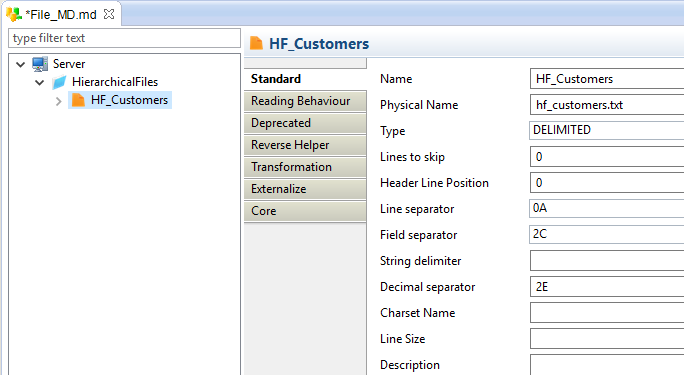

The first step is to create a File Server Metadata, and a directory in it.

Then add a file with New > File on it and configure its properties.

Example for our file which is a DELIMITED file with a comma as field separator:

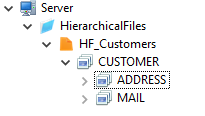

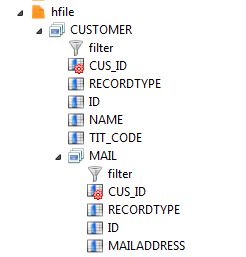

Next, create the record structure in a hierarchical way by adding new records with New > Record

Finally, add the records' fields with New > Field

Note:

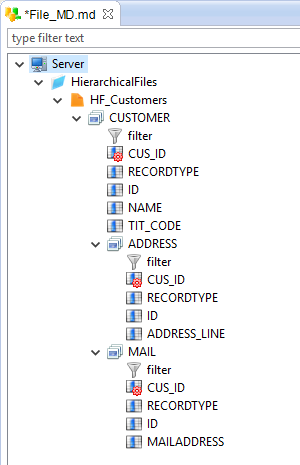

The next step is to add a filter on each record with New -> Filter

The filters will be used at reading, to differentiate the different records.

They are required if you plan to read the file (using it as source in Mappings, etc...), otherwise, Stambia has no way to differentiate the records.

Each filter must be configured to test the value of the field that permits to recognize the record.

For our example, it is the RECORDTYPE field, which is the first character of the line (Starts At 1, Length 1).

For customer records, the value of RECORDTYPE is 'C' (for address 'A', ...).

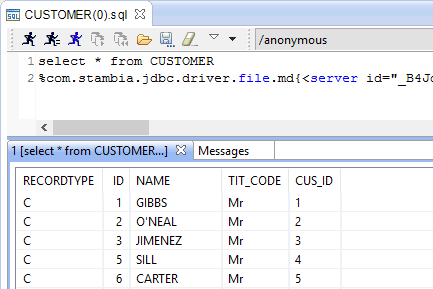

If the file exists on your machine, you can verify if a filter is correctly configured by consulting the record's data with Right click > Consult data on it.

The generated request should return the customers lines:

The last step is to add computed fields, if necessary.

Computed fields are specific fields that are calculated at execution, when the file is read.

They are very useful to simulate a Primary Key/Foreign Key between records for joining records in mappings for example.

Please refer to this article to learn how to use computed fields.

We are going to use them on our file for this purpose:

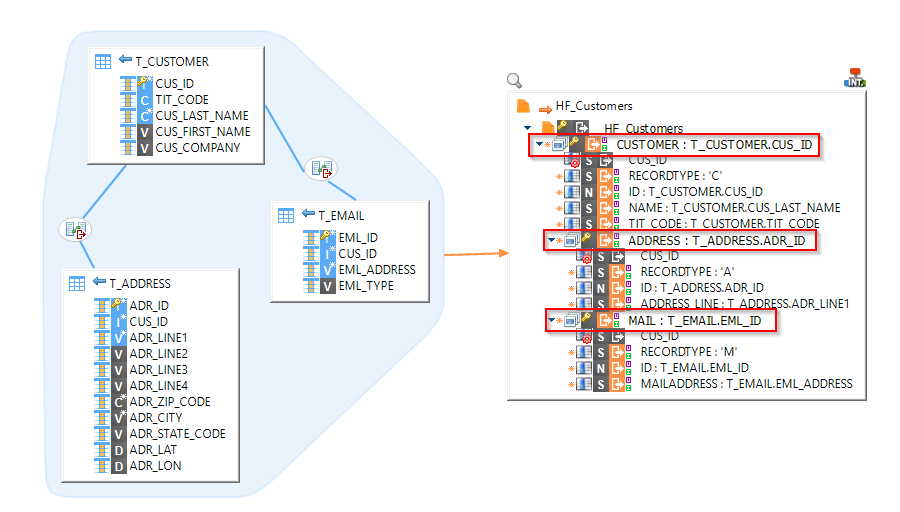

To load a hierarchical file:

Action Process INTEGRATION Hierarchical File

Note:

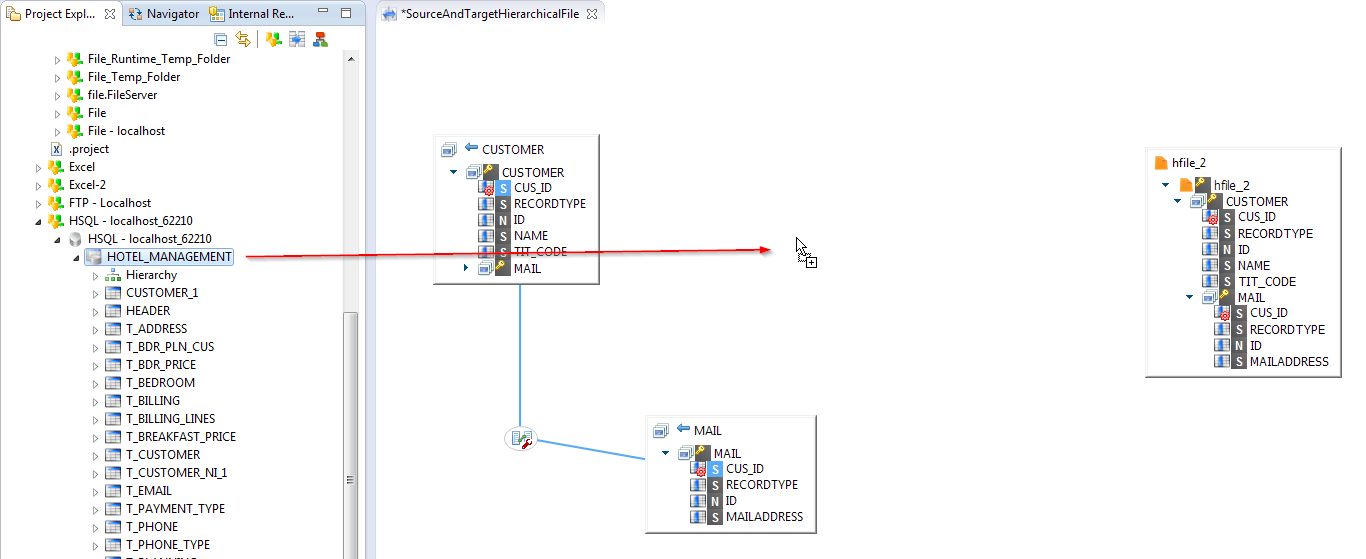

To use a hierarchical file as source, create a Mapping.

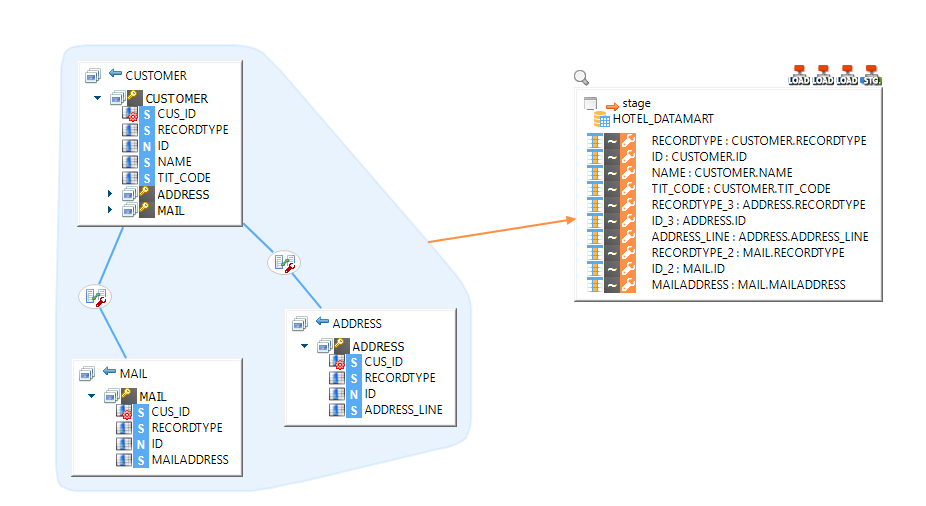

Next, drag and drop all the needed source records/sub-records from the hierarchical file. It is not possible to use fields from sub-records directly, they must be instantiated on the mapping:

As you can see, the MAIL and ADDRESS records are instantiated on the mapping, and joined with the help of the computed fields.

For the example, we only load a stage table but it can be any RDBMS table.

If you want to load another file directly from a hierarchical file, you'll have to go through a stage, like explained in this article.

When working with files, Stambia offers the possibility to create and use Computed Fields.

These particular fields, on which an expression is configured, are calculated at execution when the file is read.

It can be useful to simulate a Primary/Foreign Key for example in hierarchical files.

Note that Computed Fields should be used only for reading. They are "virtual" fields, they do not exist on the file, and the calculated values can vary at each execution.

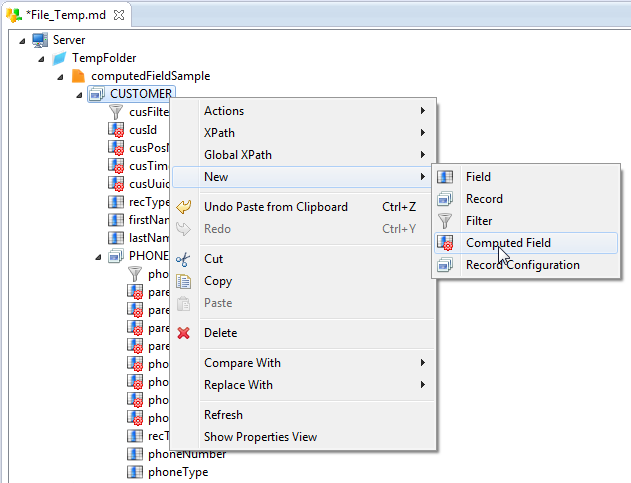

To create Computed Fields, Right click on a file or record and choose New > Computed Field

Then fill the parameters corresponding to your needs.

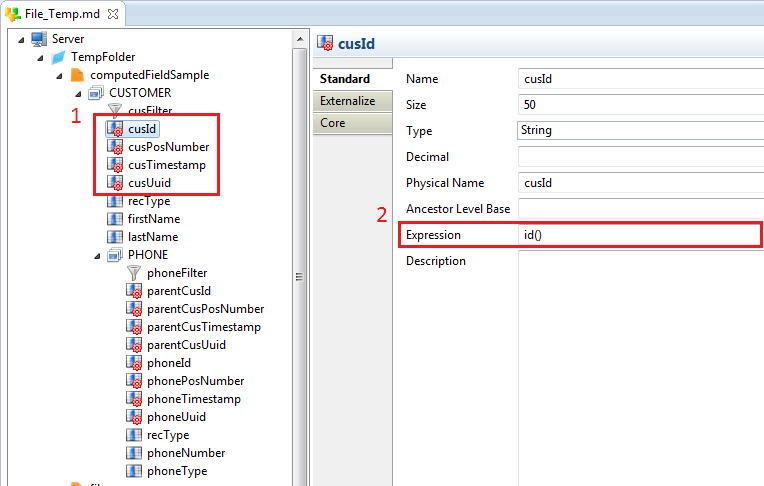

1 - Computed Fields have a different icon, to differentiate it from the normal fields.

2 - The Expression is the function which will be used to calculate the field.

The available functions are:

| id() | Generates an ID |

| localPosition() | Indicate the record/row number |

| timestamp() | Generates a timestamp |

| uuid() | Generates an UUID |

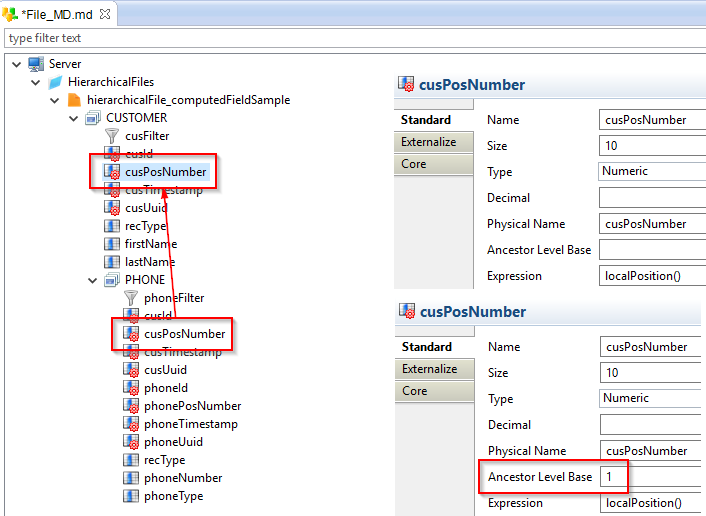

It is possible to make "links" between Computed Fields, like a Primary/Foreign key would do in a table.

For that, the Ancestor Level Base parameter has to be set.

Example:

The two fields have the expression set to localPosition() and the cusPosNumber in the sub-record has the Ancestor Level Base parameter set to 1.

With this settings, each Phone of the same customer will have the same cusPosNumber, like a table Foreign Key would do.

Note:

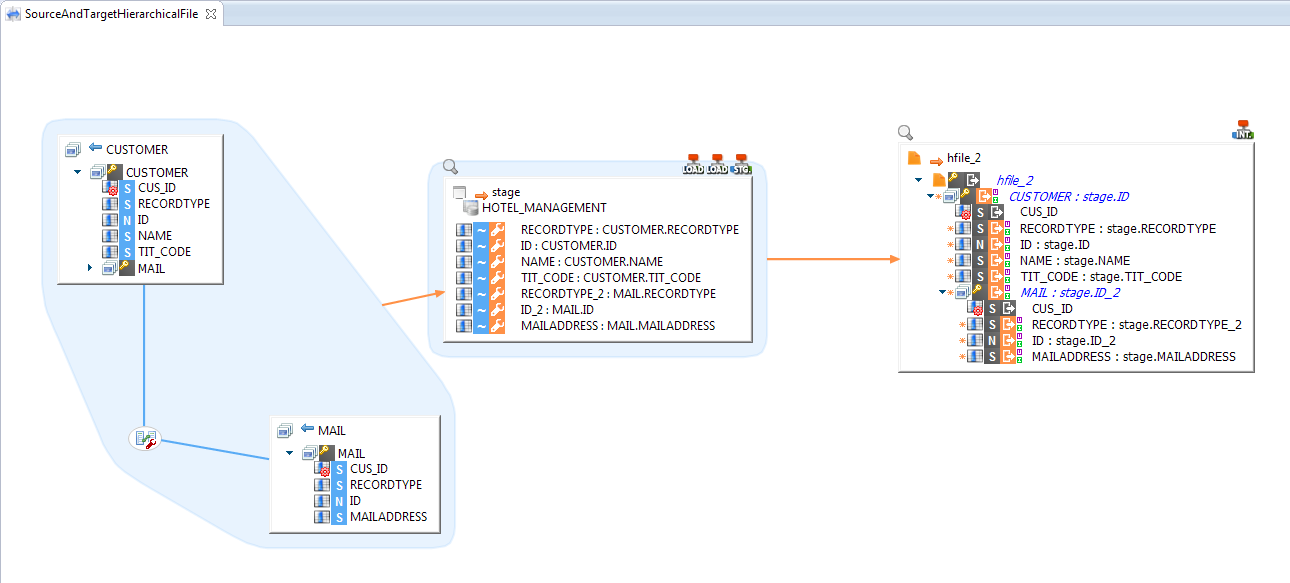

During the integration process, Stambia creates and uses temporary tables to load and integrate the data into the target. But, when using files as source and target, these tables cannot be created, as no databases are used.

This article demonstrates a solution to create a mapping to load data when the source and target are both hierarchical files.

The structure of our files looks like this :

We are using here computed fields to simulate a Primary/Foreign key between the record and sub-records. See this article for more informations about computed fields.

Since the S18 Stambia Designer, it is possible to make multi-target mappings, and create stages. The creation of stages is a powerfull fonctionnality which permits to have a temporary place in a mapping (in which we can make operations on data before insertion, like unions, minus, ...).

We are going to use this to accomplish our goal.

Note that even if stages are only available since the S18 Designer, older runtimes can understand and execute the processes generated in S18. It is so possible to use the comfort of S18 without having to upgrade the runtimes.

To use a stage, drag and drop a database schema in which you want the temporary tables used by the stage to be created.

Note

In a mapping all source records have to be instanciated. It is not actually possible to map fields of the sub-records, like the MAIL one, directly from the CUSTOMER main record.

The sub-records have to be put on the mapping and joined. We used here the computed fields to make the join.

Now, we can drag and drop on the stage the fields from the sources we want to use, and then map them to the target :

Note

The Record Keys on the target have to be mapped.

The S17 Designer doesn't permit to use stages and multi-target. The solution is to create a table on a database and separate the process in two mappings.

Mapping 1 : Source File > Table

Mapping 2 : Table > Target File

A process can then be created to execute the two mappings.

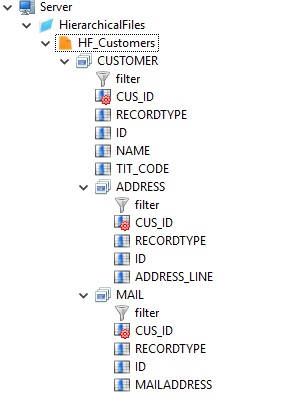

This article explains how to make an order by in a mapping when loading hierarchical files.

The hierarchical file we are loading looks like this:

See this article if you need help to make it.

Our goal is to order the data by TIT_CODE when loading the file from the demo database.

The mapping is very similar to what we would usually do when loading a hierarchical file.

The source fields are mapped to the target and all the records are mapped with a repetition key.

Now, to order the customers by TIT_CODE, we are going to modify the expression of the customer record node.

The idea is to concatenate all the columns we would have put in an ORDER BY SQL Query.

We set it here to CONCAT(T_CUSTOMER.TIT_CODE, T_CUSTOMER.CUS_ID).

The data will then be ordered by TIT_CODE and CUS_ID