Loading data into Redshift via S3 buckets

When you need to load data from your local databases into Amazon Redshift, Stambia DI lets you design a simple mapping that performs the following steps automatically:

1. Export data from your database to a file

2. Send the file to an Amazon S3 bucket

3. Load the S3 file into Redshift
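To show what step 1 involves outside the tool, here is a minimal Python sketch. It is not Stambia's implementation. It dumps a table to a CSV file with a header row. An in-memory SQLite database stands in for your local database, and the function name `export_table_to_csv` is hypothetical. Steps 2 and 3 would then upload that file to S3 and run a Redshift `COPY` on it.

```python
import csv
import os
import sqlite3
import tempfile


def export_table_to_csv(conn: sqlite3.Connection, table: str, path: str) -> int:
    """Write all rows of `table` to `path` as CSV, header row first.

    Returns the number of data rows written (header excluded).
    """
    # `table` is interpolated into the SQL text, so it must come from trusted code.
    cursor = conn.execute(f'SELECT * FROM "{table}"')
    header = [col[0] for col in cursor.description]
    rows_written = 0
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        for row in cursor:
            writer.writerow(row)
            rows_written += 1
    return rows_written


if __name__ == "__main__":
    # Hypothetical source table in an in-memory SQLite database.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Alice"), (2, "Bob")])
    out = os.path.join(tempfile.mkdtemp(), "customers.csv")
    print(export_table_to_csv(conn, "customers", out), "rows written to", out)
```

The `csv` module takes care of quoting values that contain the delimiter. That matters because the file is later parsed by Redshift rather than by the tool that wrote it.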

This article shows how to create the required metadata and how to design the mapping.

Pre-requisites

Please make sure that you have installed the Amazon components as indicated in this article.

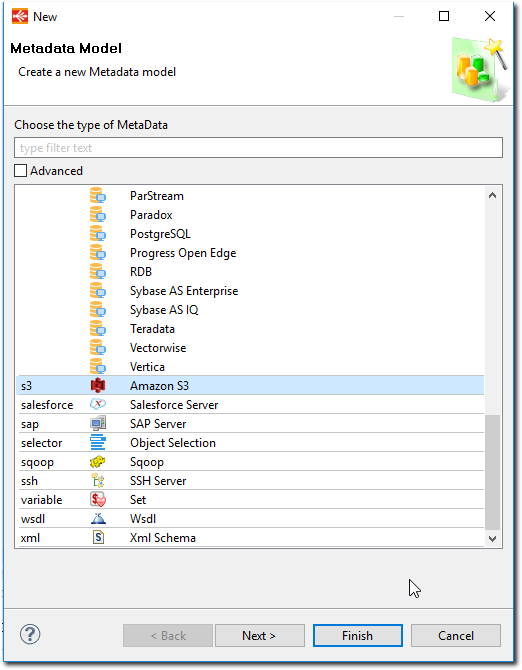

Creating the Amazon S3 metadata

Simply create an Amazon S3 metadata object:

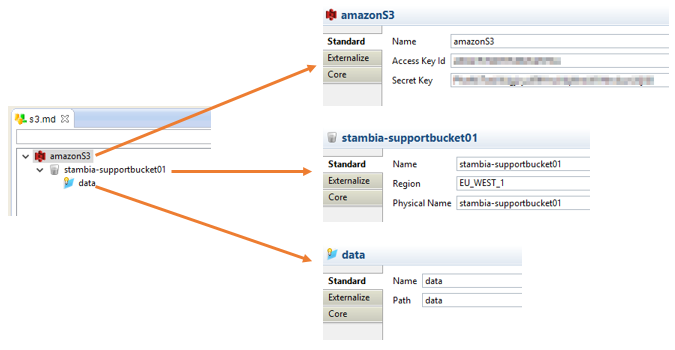

Then right-click it to add a bucket and a folder. Each node can be configured:

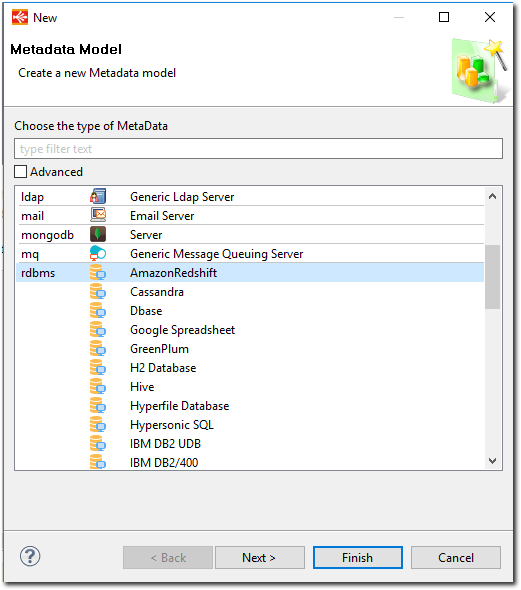
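The bucket and folder nodes together determine where a staged file ends up in S3. The sketch below assumes the folder node maps to an S3 key prefix, since S3 has no real directories. It shows how a bucket, a folder and a file name combine into an object key and an `s3://` URI. The helper names `s3_key` and `s3_uri` and the example values are hypothetical. Outside Stambia, boto3's `upload_file(Filename, Bucket, Key)` would take the key built this way.

```python
def s3_key(folder: str, file_name: str) -> str:
    """Object key for `file_name` stored under the `folder` prefix."""
    # A "folder" in S3 is only a key prefix; normalise surrounding slashes.
    prefix = folder.strip("/")
    return f"{prefix}/{file_name}" if prefix else file_name


def s3_uri(bucket: str, folder: str, file_name: str) -> str:
    """Full s3:// URI, the form a Redshift COPY expects in its FROM clause."""
    return f"s3://{bucket}/{s3_key(folder, file_name)}"


if __name__ == "__main__":
    # Hypothetical bucket and folder names.
    print(s3_uri("my-staging-bucket", "redshift/staging/", "customers.csv"))
```

With the example values above, the script prints `s3://my-staging-bucket/redshift/staging/customers.csv`.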

Creating the Amazon Redshift metadata

Create a new metadata object based on the rdbms.AmazonRedshift model.

Fill in the JDBC URL and credentials, then reverse your Redshift database schema as you would with any PostgreSQL database.

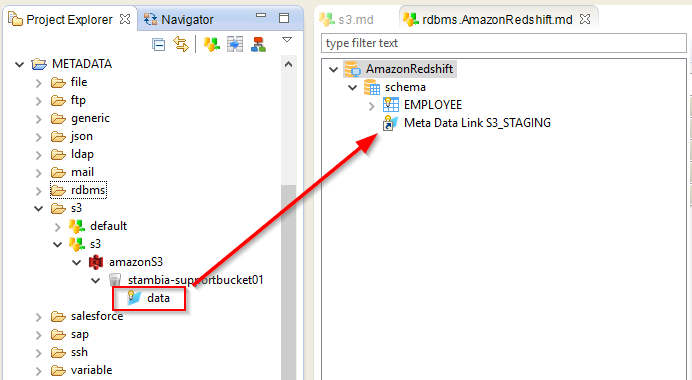
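The JDBC URL for a cluster is built from its endpoint host, port and database name. The sketch below assumes the Amazon Redshift JDBC driver, whose URLs start with `jdbc:redshift://`. If your metadata uses the PostgreSQL driver instead, the prefix would be `jdbc:postgresql://`. The function name and the example endpoint are hypothetical. 5439 is Redshift's default port.

```python
def redshift_jdbc_url(endpoint: str, database: str, port: int = 5439) -> str:
    """Build a JDBC URL in the jdbc:redshift://host:port/database form."""
    # The console "Endpoint" value often already contains ":port/db";
    # this helper expects the bare host name only.
    if ":" in endpoint or "/" in endpoint:
        raise ValueError("pass the cluster host name only, without port or database")
    return f"jdbc:redshift://{endpoint}:{port}/{database}"


if __name__ == "__main__":
    # Hypothetical cluster endpoint and database name.
    print(redshift_jdbc_url("examplecluster.abc123.us-west-2.redshift.amazonaws.com", "dev"))
```

Rejecting a host that already carries a port or database avoids producing a malformed URL such as `host:5439/dev:5439/dev`.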

Once this is done, drag and drop the S3 folder into the Redshift schema.

Then rename the resulting node to "S3_STAGING": the Load templates rely on this exact name to reach the folder.

Designing the mapping

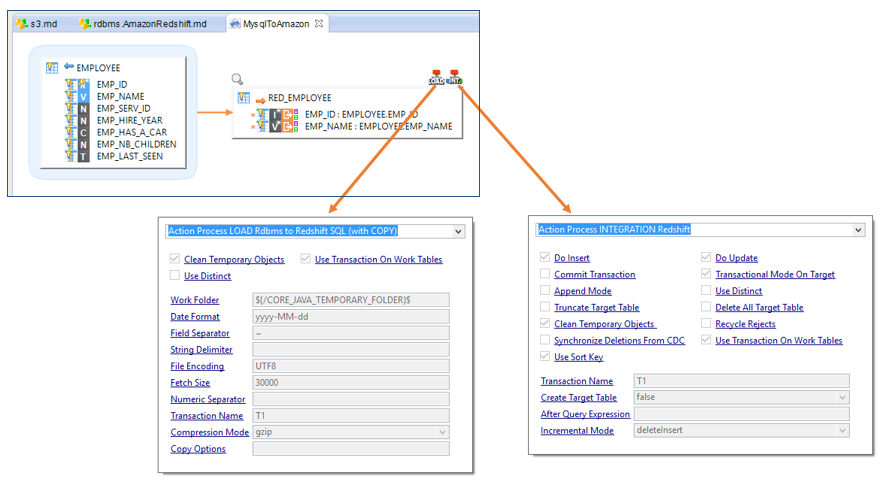

Design your mapping as usual, with your Redshift table as the target, and make sure that the "LOAD Rdbms to Redshift SQL (with COPY)" and "INTEGRATION Redshift" templates are selected.
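As its name indicates, the LOAD template relies on Redshift's `COPY` command to pull the staged file from S3. The sketch below builds a `COPY` statement of that general kind for a CSV file with one header row. It is an illustration only. The exact statement and options the template generates are not documented here, and neither is how it authenticates (for example an IAM role or the access keys from the S3 metadata). The function name, table and role ARN are hypothetical.

```python
def redshift_copy_sql(table: str, s3_uri: str, iam_role_arn: str,
                      delimiter: str = ",", header_rows: int = 1) -> str:
    """Build a Redshift COPY statement loading a CSV file from S3 into `table`."""

    def quote(literal: str) -> str:
        # Double embedded single quotes so the value is a valid SQL string literal.
        return "'" + literal.replace("'", "''") + "'"

    # `table` is inserted as-is, so it must be a trusted, already-qualified name.
    return (
        f"COPY {table}\n"
        f"FROM {quote(s3_uri)}\n"
        f"IAM_ROLE {quote(iam_role_arn)}\n"
        f"FORMAT AS CSV DELIMITER {quote(delimiter)}\n"
        f"IGNOREHEADER {header_rows};"
    )


if __name__ == "__main__":
    print(redshift_copy_sql(
        "public.customers",
        "s3://my-staging-bucket/redshift/staging/customers.csv",
        "arn:aws:iam::123456789012:role/MyRedshiftRole",  # hypothetical role
    ))
```

`IGNOREHEADER 1` skips the header row written during the export step. The CSV format option lets Redshift handle quoted fields that contain the delimiter.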